What are “Bad Requests”?

Bad requests are HTTP requests that result in a “404 Not Found” or “410 Gone” error. This most commonly happens when a user follows a broken or dead link: since the webpage does not exist on the server, the server generates this error message and returns it to the user. However, a webpage can work correctly and still include one or more “dead” resources. For example, it may reference a JavaScript file that no longer exists, or a CSS file that has been moved to a different location while the old link remains. Sometimes a page also includes images that no longer exist.

How to check for missing resources on the webpage?

The easiest way to check for missing resources on a webpage is to use your browser’s developer tools. Most modern browsers come with tool sets that let you examine network traffic. The common way to open the developer tools is to press the F12 key while viewing the webpage. My preferred way to analyze webpage resources is Firebug, a developer plugin for the Firefox browser.
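Developer tools are the quickest way to inspect a single page. To check pages from a script instead, a minimal Python sketch along these lines could work, assuming the page is static HTML (resources added later by JavaScript are not seen). It collects the `src` of `<script>` and `<img>` tags and the `href` of stylesheet `<link>` tags, resolves them against the page URL, and reports the ones answering 404 or 410. The names `extract_resource_urls`, `http_status` and `find_bad_resources` are hypothetical; only the Python standard library is used.

```python
from html.parser import HTMLParser
from urllib.error import HTTPError
from urllib.parse import urljoin
from urllib.request import Request, urlopen

BAD_STATUSES = (404, 410)  # "404 Not Found" and "410 Gone"


class ResourceCollector(HTMLParser):
    """Collects absolute URLs of scripts, images and stylesheets in a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.urls = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)  # boolean attributes such as "async" map to None
        if tag in ("script", "img"):
            ref = attrs.get("src")
        elif tag == "link" and "stylesheet" in (attrs.get("rel") or "").lower().split():
            ref = attrs.get("href")
        else:
            ref = None
        if ref:
            # Resolve relative references against the page URL.
            self.urls.append(urljoin(self.base_url, ref))


def extract_resource_urls(html, base_url):
    """Return the resource URLs referenced by an HTML document, in page order."""
    collector = ResourceCollector(base_url)
    collector.feed(html)
    collector.close()
    return collector.urls


def http_status(url, timeout=10.0):
    """Return the status code of a HEAD request (network errors propagate)."""
    try:
        with urlopen(Request(url, method="HEAD"), timeout=timeout) as response:
            return response.status
    except HTTPError as err:
        # urlopen raises HTTPError for 4xx/5xx; the error carries the status code.
        return err.code


def find_bad_resources(urls, status_of=http_status):
    """Return (url, status) pairs for resources answering 404 or 410."""
    bad = []
    for url in urls:
        status = status_of(url)
        if status in BAD_STATUSES:
            bad.append((url, status))
    return bad
```

For example, after downloading a page's HTML, `find_bad_resources(extract_resource_urls(html, page_url))` returns pairs such as `(url, 404)`. The sketch sends HEAD requests to avoid downloading response bodies. A server that does not support HEAD may answer 405 Method Not Allowed instead, and you would then need to retry with a GET request.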

Why is it important to avoid bad requests?

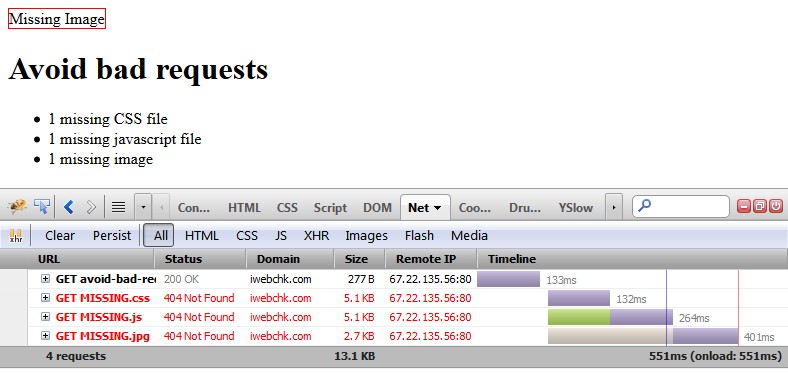

Bad requests are often quite a resource hug. To review the effects of dead resources on a webpage we have created a very simple test page with references to one missing style sheet CSS file, one missing JavaScript file and one bad image:

The first noticeable item in the traffic analysis of the page is the size of the 404 Not Found responses, which are not small compared to our tiny test page of only 277 bytes. The size of an error page varies with the server and website configuration, but it will usually be at least several kilobytes, because the response typically consists of headers plus text or HTML code explaining the error. If you have a fancy custom 404 error page that is large, the difference will be even more dramatic. Removing references to missing resources will definitely decrease bandwidth usage.
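Browser developer tools can typically export the captured network traffic as a HAR (HTTP Archive) file, which is a JSON document. The following Python sketch totals how many requests in such a capture returned 404 or 410 and how many bytes they transferred. It assumes the HAR 1.2 field names (`log.entries`, `request.url`, `response.status`, `response.headersSize`, `response.bodySize`, `time`). The function names and the layout of the returned dictionary are hypothetical.

```python
import json

BAD_STATUSES = (404, 410)  # "404 Not Found" and "410 Gone"


def load_har(path):
    """Load a HAR file exported from the browser's network panel."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def summarize_bad_requests(har):
    """Count 404/410 entries in a parsed HAR 1.2 document and total their cost."""
    summary = {"count": 0, "bytes": 0, "ms": 0.0, "urls": []}
    for entry in har["log"]["entries"]:
        response = entry["response"]
        if response["status"] not in BAD_STATUSES:
            continue
        summary["count"] += 1
        summary["urls"].append(entry["request"]["url"])
        # HAR uses -1 for sizes that are unknown; treat those as 0 bytes.
        summary["bytes"] += max(response.get("headersSize", -1), 0)
        summary["bytes"] += max(response.get("bodySize", -1), 0)
        # Per-request durations in ms. Their sum is NOT the added page load time,
        # because browsers fetch several resources in parallel.
        summary["ms"] += entry.get("time", 0)
    return summary
```

For example, `summarize_bad_requests(load_har("page.har"))` would summarize an exported capture, where `page.har` is a placeholder file name.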

Page load time is another reason why it is important to avoid bad requests. Our small test page itself loaded in only 133 ms, but because of the 404 errors the total load time rose to 551 ms. That is more than four times as long, an increase of roughly 314%. Users do not appreciate slow webpages, and since Google takes page speed into consideration for search ranking, the speed of a webpage is more important than ever.
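The relative increase follows directly from the two measured load times:

$$
\frac{551\,\text{ms} - 133\,\text{ms}}{133\,\text{ms}} = \frac{418}{133} \approx 3.14 \;\Rightarrow\; \approx 314\%\ \text{increase},
\qquad
\frac{551\,\text{ms}}{133\,\text{ms}} \approx 4.14\times
$$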

Finally, let’s consider the number of requests per page. Ideally, the number of requests should be kept to a minimum to preserve server resources. Each request that results in a 404 error causes additional CPU, memory, disk and network utilization on the server that could have been avoided. These wasted resources could be better used to speed up your webpages.

How to fix bad requests?

There are only two ways to fix bad requests: either remove the references to missing files from the webpage’s source code, or make sure that the files exist in the referenced locations.
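For a static site, the second fix can be verified before deployment. Here is a minimal Python sketch. It assumes the site is a directory of `.html` files and that root-relative references (starting with `/`) map to that directory. It lists `<script>`, `<img>` and stylesheet `<link>` references whose target file does not exist. External URLs are skipped, and server-side rewrites or redirects are not taken into account. `RefCollector` and `missing_local_files` are hypothetical names.

```python
from html.parser import HTMLParser
from pathlib import Path
from urllib.parse import unquote, urlsplit


class RefCollector(HTMLParser):
    """Collects raw src/href values of scripts, images and stylesheets."""

    def __init__(self):
        super().__init__()
        self.refs = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("script", "img"):
            ref = attrs.get("src")
        elif tag == "link" and "stylesheet" in (attrs.get("rel") or "").lower().split():
            ref = attrs.get("href")
        else:
            ref = None
        if ref:
            self.refs.append(ref)


def missing_local_files(site_root):
    """Yield (page, reference) pairs whose referenced file is absent under site_root."""
    root = Path(site_root)
    for page in sorted(root.rglob("*.html")):
        collector = RefCollector()
        collector.feed(page.read_text(encoding="utf-8", errors="replace"))
        collector.close()
        for ref in collector.refs:
            parts = urlsplit(ref)
            if parts.scheme or parts.netloc:
                continue  # external, protocol-relative or data: URL, not a local file
            # parts.path excludes ?query and #fragment; unquote decodes %20 etc.
            path = unquote(parts.path)
            if not path:
                continue
            # Assumption: "/..." is relative to the site root, anything else to the page.
            if path.startswith("/"):
                target = root / path.lstrip("/")
            else:
                target = page.parent / path
            if not target.exists():
                yield page.relative_to(root), ref
```

For example, `for page, ref in missing_local_files("public"): print(page, ref)` lists each broken reference together with the page that contains it, where `public` is a placeholder for the site’s root directory.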

To sum up, it is very important to avoid bad requests. They make your webpage slower, burden your web server with unnecessary work, and might even hurt your search engine ranking.