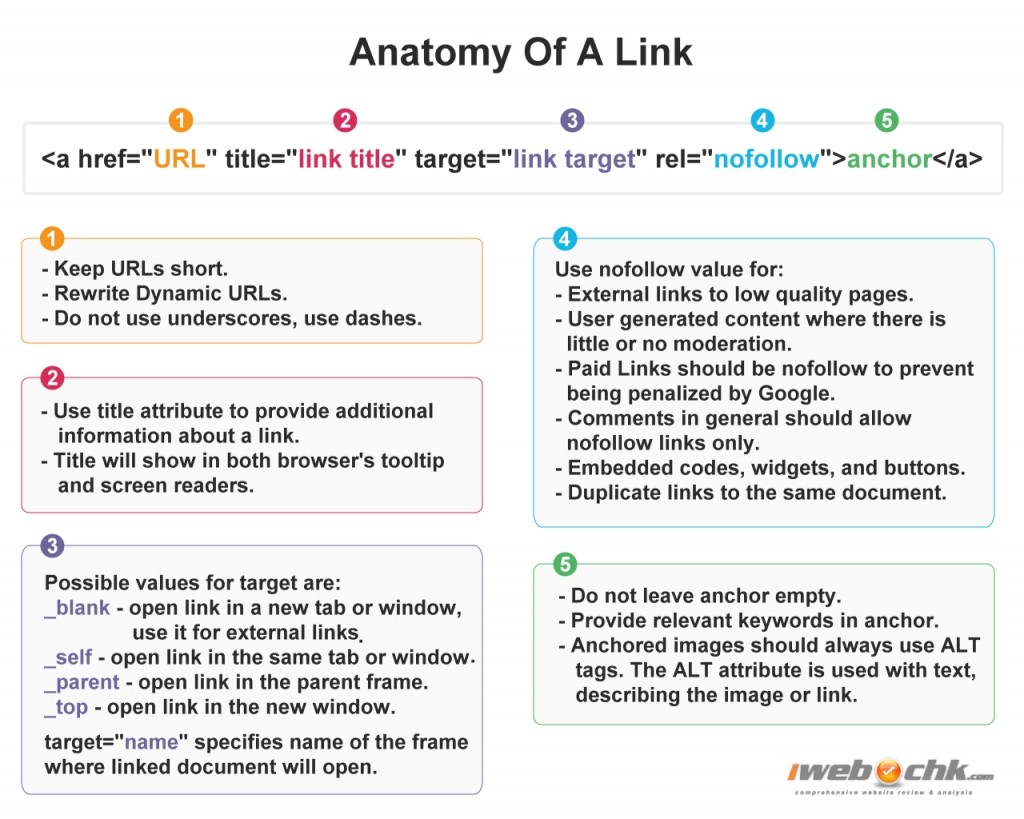

Proper utilization of hyperlinks is an integral part of a good website design and a major component of an effective search engine optimization plan. After all, the World Wide Web (WWW), in its most basic form, is simply a system of documents interconnected through links.

SEO Links Analysis

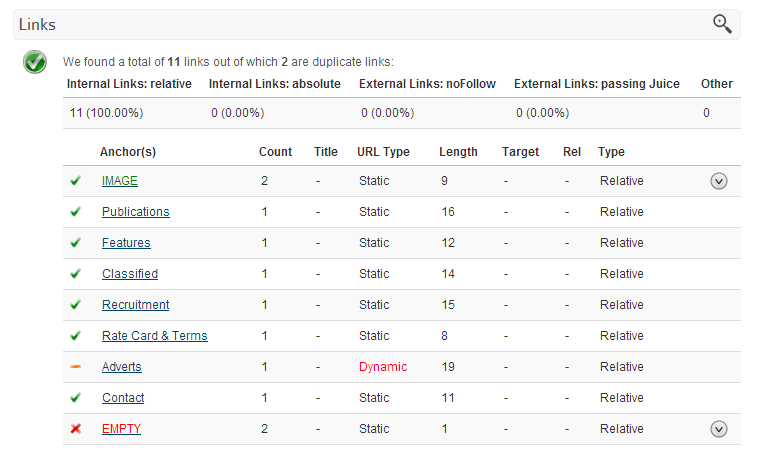

Published to New Features, SEO

We are excited to announce the launch of a new “Links Analysis” module, the most recent addition to our website analysis tool. It is no secret that properly functioning, SEO-optimized links are an integral part of a good website. The links module provides users with a vast amount of valuable information, such as the number of links on a webpage and their ratios, types, URL structure and much more.

Here is a quick overview of key data points:

- Internal Links: relative – number of internal links with relative URL.

- Internal Links: absolute – number of internal links with absolute URL.

- External Links: noFollow – number of external links that do not pass page rank.

- External Links: passing Juice – number of external links passing page rank.

- Anchors – Text or Image that is the anchor part of the links.

- Count – number of links pointing to the same resource.

- Title – shows value of the “TITLE” attribute of a link.

- URL Type – “static” indicates that the URL is SEO and user friendly; “dynamic” indicates a complex URL with many parameters in the query string.

- Length – URL length in characters.

- Target – value of the TARGET attribute. If there is no value, the link behaves as if it were set to “_self”.

- Rel – value of the REL attribute. Usually the “noFollow” value is placed here to limit the flow of page rank.
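The data points above can be approximated with a short script. The following is only a minimal sketch in Python, not the module's actual implementation: the names `LinkCollector` and `analyze_links` are hypothetical, the sample markup and hosts are made up, and treating any URL with a query string as “dynamic” is a simplifying assumption.

```python
from collections import Counter
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the attributes of every <a> tag that has an href."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            attributes = dict(attrs)
            if attributes.get("href"):
                self.links.append(attributes)


def analyze_links(html, page_url):
    """Return (summary counts, per-link rows) for the links found in html."""
    collector = LinkCollector()
    collector.feed(html)
    page_host = urlparse(page_url).netloc
    summary = Counter()
    rows = []
    for link in collector.links:
        href = link["href"]
        absolute = urljoin(page_url, href)
        parsed = urlparse(absolute)
        # A relative URL has no host part of its own.
        is_relative = urlparse(href).netloc == ""
        rel_values = (link.get("rel") or "").lower().split()
        if parsed.netloc == page_host:
            key = "internal relative" if is_relative else "internal absolute"
        elif "nofollow" in rel_values:
            key = "external nofollow"
        else:
            key = "external passing juice"
        summary[key] += 1
        rows.append({
            "url": absolute,
            # Assumption: any query string makes the URL "dynamic".
            "url_type": "dynamic" if parsed.query else "static",
            "length": len(absolute),
            # A missing TARGET attribute behaves like "_self".
            "target": link.get("target") or "_self",
            "rel": " ".join(rel_values),
            "title": link.get("title") or "",
        })
    return summary, rows


# Hypothetical sample page used only for illustration.
sample = """
<a href="/about">About</a>
<a href="https://example.com/contact" title="Contact us">Contact</a>
<a href="https://other.example.org/?id=7&amp;page=2" rel="nofollow" target="_blank">Partner</a>
<a href="https://another.example.net/">Friend</a>
"""
summary, rows = analyze_links(sample, "https://example.com/index.html")
for name, count in summary.items():
    print(f"{name}: {count}")
```

Links such as `mailto:` addresses are counted as external in this sketch; a fuller analysis would probably filter them out first.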

We hope you will find the link analysis addition helpful in your reviews. As always, we look forward to your comments and ideas for further improvements.

Avoid a Character Set in the Meta Tag

Published to Performance, Technology

Character sets (charsets) are used by browsers to convert information from a stream of bytes into readable characters. Each character is represented by a value, and each value is assigned a corresponding character in a table. There are literally hundreds of character encoding sets in use. Here is a list of just a few common character encodings used on the web, ordered by popularity:

- UTF-8 (Unicode) Covers: Worldwide

- ISO-8859-1 (Latin alphabet part 1) Covers: North America, Western Europe, Latin America, the Caribbean, Canada, Africa

- WINDOWS-1252 (Latin I)

- ISO-8859-15 (Latin alphabet part 9) Covers: Similar to ISO 8859-1 but replaces some less common symbols with the euro sign and some other missing characters

- WINDOWS-1251 (Cyrillic)

- ISO-8859-2 (Latin alphabet part 2) Covers: Eastern Europe

- GB2312 (Chinese Simplified)

- WINDOWS-1253 (Greek)

- WINDOWS-1250 (Central Europe)

- US-ASCII (basic English)

Note that the popularity of a particular charset depends greatly on the geographical region. You can find all names for character encodings in the IANA registry.
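To illustrate how the same bytes turn into different characters depending on the table used, here is a minimal sketch in Python. The helper name `decode_with` is hypothetical, and `cp1251` is Python's codec name for WINDOWS-1251.

```python
# The two bytes that UTF-8 uses for the single character "é".
raw = "é".encode("utf-8")  # b'\xc3\xa9'


def decode_with(raw_bytes, charsets):
    """Decode the same bytes under each charset and return the results."""
    return {name: raw_bytes.decode(name) for name in charsets}


results = decode_with(raw, ["utf-8", "iso-8859-1", "cp1251"])
for name, text in results.items():
    print(f"{name:>10}: {text!r}")
```

Only the UTF-8 decoding gives back the original character; the other two charsets read each byte as a separate character and produce garbled text.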

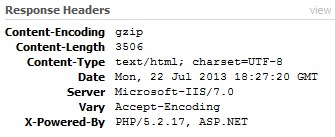

As you can see, there are multiple possibilities to choose from; therefore, character encoding information should always be specified in the HTTP Content-Type response header sent together with the document. Without specifying a charset, you risk that characters in your document will be incorrectly interpreted and displayed.

In Hypertext Transfer Protocol (HTTP), a header is simply a part of the message containing additional text fields that are sent from or to the server. When a browser requests a webpage, the web server sends, in addition to the HTML source code of the webpage, fields containing various metadata describing settings and operational parameters of the response. In other words, the HTTP header is a set of fields containing supplemental information about the user request or server response.

From the example above, the “Response Headers” contain several fields with information about the server, content and encoding, where the line

Content-Type: text/html; charset=utf-8

informs the browser that characters in the document are encoded using the UTF-8 charset.
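As a rough illustration of how a client might read the charset out of that header line, here is a minimal sketch using Python's standard `email.message` module. The function name `parse_content_type` is hypothetical, and real browsers apply additional fallback rules that are not shown here.

```python
from email.message import Message


def parse_content_type(header_value):
    """Split a Content-Type header value into (media type, charset or None)."""
    message = Message()
    message["Content-Type"] = header_value
    return message.get_content_type(), message.get_content_charset()


media_type, charset = parse_content_type("text/html; charset=utf-8")
print(media_type, charset)

# When the header carries no charset parameter, the second value is None
# and the client has to guess the encoding.
print(parse_content_type("text/html"))
```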

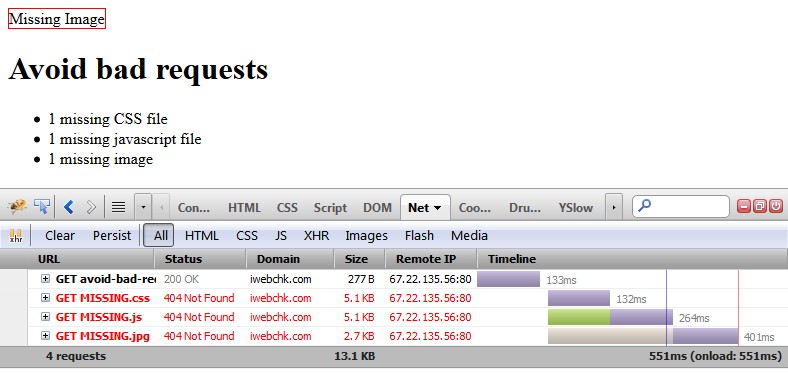

Avoid Bad Requests to Speedup Your Webpages

Published to Performance, SEO

What are “Bad Requests”?

Bad requests are HTTP requests that result in “404 Not Found” or “410 Gone” errors. This most commonly happens when a user accesses a broken or dead link: since the webpage does not exist on the server, the error message is generated and returned to the user. However, it is possible to have a valid webpage that works correctly yet includes one or more “dead” resources. For example, there can be a reference to a JavaScript file that no longer exists, or a CSS file that has been moved to a different location while the old link remains. Sometimes a page includes images that no longer exist.

How to check for missing resources on the webpage?

The easiest way to check for missing resources on a webpage is to use your browser’s developer tools. Most modern browsers come with tool sets that allow you to examine network traffic. The common way to access developer tools is to press the “F12” key on your keyboard while browsing the webpage. My preferred way to analyze webpage resources is with Firebug, a developer plugin for the Firefox browser.
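The same check can also be scripted. The following is a minimal sketch in Python, assuming a page whose stylesheets, scripts and images are referenced through `link`, `script` and `img` tags; the names `ResourceCollector`, `http_status` and `find_bad_requests`, the sample markup and the example.com URLs are all hypothetical. The demonstration uses a fake status lookup so that it runs without network access.

```python
from html.parser import HTMLParser
from urllib.error import HTTPError, URLError
from urllib.parse import urljoin
from urllib.request import Request, urlopen

# Tag name -> attribute that holds the resource URL.
RESOURCE_ATTRIBUTES = {"script": "src", "img": "src", "link": "href"}


class ResourceCollector(HTMLParser):
    """Collects absolute URLs of scripts, images and linked files."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.resources = []

    def handle_starttag(self, tag, attrs):
        attribute = RESOURCE_ATTRIBUTES.get(tag)
        value = dict(attrs).get(attribute) if attribute else None
        if value:
            self.resources.append(urljoin(self.base_url, value))


def http_status(url):
    """Return the HTTP status code for url, or None if it is unreachable."""
    try:
        with urlopen(Request(url, method="HEAD"), timeout=10) as response:
            return response.status
    except HTTPError as error:
        return error.code
    except URLError:
        return None


def find_bad_requests(html, base_url, get_status=http_status):
    """Return (url, status) pairs for resources answering 404 or 410."""
    collector = ResourceCollector(base_url)
    collector.feed(html)
    bad = []
    for url in collector.resources:
        status = get_status(url)
        if status in (404, 410):
            bad.append((url, status))
    return bad


# Hypothetical page with three dead resources and one valid image.
page = """<html><head>
<link rel="stylesheet" href="missing.css">
<script src="missing.js"></script>
</head><body><img src="bad-image.png"><img src="logo.png"></body></html>"""

# Fake lookup used for the demonstration: only logo.png exists.
known = {"https://example.com/logo.png": 200}
bad = find_bad_requests(page, "https://example.com/",
                        get_status=lambda url: known.get(url, 404))
for url, status in bad:
    print(status, url)
```

Calling `find_bad_requests(page, base_url)` without the `get_status` argument would issue real HEAD requests instead of using the fake lookup.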

Why is it important to avoid bad requests?

Bad requests are often quite a resource hog. To review the effects of dead resources on a webpage, we have created a very simple test page with references to one missing CSS style sheet, one missing JavaScript file and one bad image.

The first noticeable item in the traffic analysis of the page is the size of the 404 Not Found responses, which are not small in comparison to our tiny test page of only 277 bytes. Depending on the server and website configuration, the size of the error page will vary, but it will usually be at least several kilobytes, as the response usually consists of headers and text or HTML code with the explanation of the error. If you have a fancy custom 404 error page that is large in size, the difference would be even more dramatic. Removing references to missing resources will definitely decrease bandwidth usage.

New and Improved Navigation for Google Webmaster Tools

Published to Technology, Tools

The Google Webmaster Tools team has just rolled out new and improved navigation. The new organization separates features according to the search stages, which are crawling, indexing and serving:

- Crawl section contains information about how Google crawls and discovers data. There you can find information about crawl errors, stats, blocked URLs, sitemaps and URL parameters. Under this section you can also use the “Fetch as Google” feature.

- Google Index section contains information about index status and content keywords, and has an option to remove URLs.

- Search Traffic section lists search queries, backlinks and internal links.

There is also a new “Search Appearance” section with a nice popup showing a search appearance overview with a detailed explanation of each element.

Under the Search Appearance section you can also find information pertaining to the popup, such as structured data of your content, data highlighter, HTML improvements and sitelinks.

Optimize Page Speed For Better SEO and User Experience

Published to Performance, SEO

In April of 2010, Google announced that it includes site speed as one of the signals in its search ranking algorithms. The reasoning behind that decision was rather simple: “Faster sites create happy users”. Google even did some research to back up the obvious point that users prefer faster websites. For a visitor of a website, speed is no doubt an important factor in the overall user experience, but according to the head of Google’s Webspam team, Matt Cutts, site speed plays only a minuscule role in Google’s search ranking algorithms and pales in comparison to relevancy factors.

Google estimates that fewer than 1 out of 100 queries are impacted by the site speed factor. Nevertheless, a fast website is extremely important in the broader picture. Well-optimized web pages preserve server resources, improve user experience and ensure that the website will not be penalized by Google’s page speed check.

How To Fix Website Blocked By Google Safe Browsing

Published to Security

What is safe browsing?

“Safe Browsing is a service provided by Google that enables applications to check URLs against Google’s constantly updated lists of suspected phishing and malware pages.” (https://developers.google.com/safe-browsing/)

What happens if my site is flagged as suspicious?

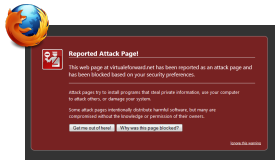

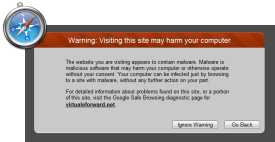

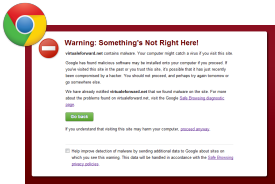

It is possible that your website has been hacked and is being used for phishing attacks or to distribute malware. In this case your website is blacklisted and will be blocked by the Firefox, Safari and Chrome browsers, which present users with a very alarming warning message:

Reported Attack Page! This web page at [WEBSITE] has been reported as an attack page and has been blocked based on your security preferences. Attack pages try to install programs that steal private information, use your computer to attack others, or damage your system. Some attack pages intentionally distribute harmful software, but many are compromised without the knowledge or permission of their owners.

Warning: Visiting this site may harm your computer. The website you are visiting appears to contain malware. Malware is malicious software that may harm your computer or otherwise operate without your consent. Your computer can be infected just by browsing to a site with malware, without any further action on your part.

Warning: Something’s Not Right Here! [WEBSITE] contains content from [WEBSITE], a site known to distribute malware. Your computer might catch a virus if you visit this site. Google has found malicious software may be installed onto your computer if you proceed. If you’ve visited this site in the past or you trust this site, it’s possible that it has just recently been compromised by a hacker. You should not proceed, and perhaps try again tomorrow or go somewhere else.

Keyword Density and Consistency in SEO

Published to SEO Content

Keyword density and consistency are notable factors for optimal page SEO. Preferred keywords should have a higher keyword density, indicating their importance. Optimally, targeted keywords should be used consistently in multiple essential areas of the page, such as the title, description meta tag, h1 through h6 headings, image alt attributes, and the anchor text of backlinks and internal links.

It is worth noting that keyword density and consistency have decreased in importance over the years due to keyword spamming and stuffing techniques. Also, over time keyword density has proven to be a rather poor measure of relevancy altogether, and search engines have developed more sophisticated ways of understanding page content. Nevertheless, keywords are still an essential part of search engine optimization and should not be overlooked.
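For reference, here is a minimal sketch in Python of how density and consistency might be measured. It assumes the common definition of density as the share of words on the page that match the keyword, which this post does not spell out; the function names and the sample page content are hypothetical, and only single-word keywords are handled.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def keyword_density(text, keyword):
    """Percentage of the words in text that equal the keyword."""
    words = tokenize(text)
    if not words:
        return 0.0
    return 100.0 * words.count(keyword.lower()) / len(words)


def keyword_consistency(areas, keyword):
    """Map each named page area to whether it contains the keyword."""
    return {name: keyword.lower() in tokenize(text)
            for name, text in areas.items()}


# Hypothetical page content used only for illustration.
body = ("Leather wallets age well. "
        "Each of our wallets is cut and stitched by hand.")
page_areas = {
    "title": "Handmade Leather Wallets",
    "description": "Shop durable leather wallets made by hand.",
    "h1": "Our Wallets",
    "alt": "Brown bifold on a table",
}

density = keyword_density(body, "wallets")
consistency = keyword_consistency(page_areas, "wallets")
print(f"density: {density:.1f}%")
for area, present in consistency.items():
    print(f"{area}: {'yes' if present else 'no'}")
```

In this sample the keyword is missing from the image alt text, which is the kind of gap a consistency check is meant to surface.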

How to decide on your keywords?

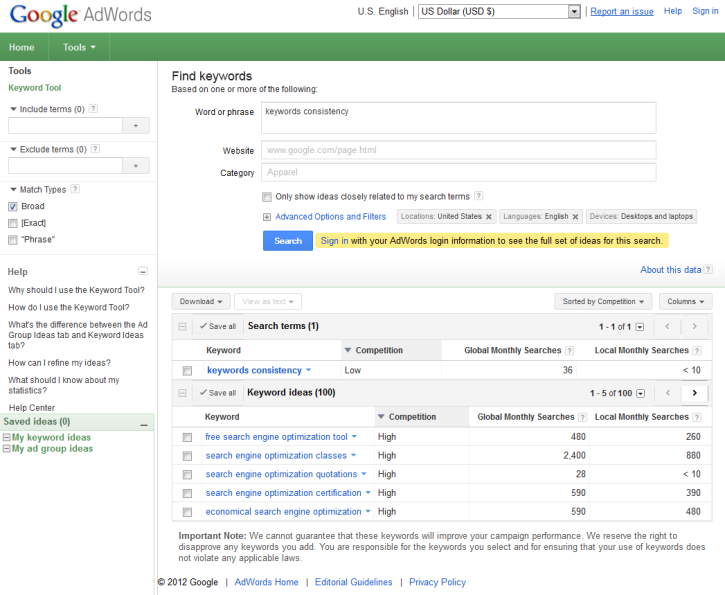

Each page should have its own set of important keywords and phrases, and those words should always be very relevant to the page content. Deciding on the right keywords can sometimes be a difficult task. Fortunately, Google provides a tool for finding keyword-related searches, which I have found quite helpful on numerous occasions: https://adwords.google.com/o/KeywordTool

With a keyword tool you can evaluate the effectiveness of a targeted phrase by reviewing the average number of global or local monthly searches, as well as by checking various keyword ideas.

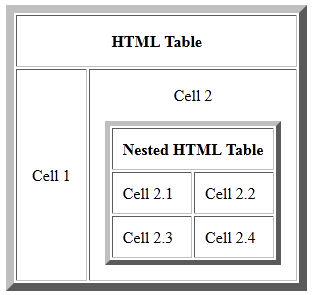

Why Not To Use Nested HTML Tables

Published to Accessibility, Technology

In the past, using HTML tables for layout was a common practice. Although Cascading Style Sheets (CSS) were developed by the World Wide Web Consortium in 1996, the technology was not fully adopted until 2004, about the time when the CSS 2.1 working draft was proposed. Before then, many browsers supported CSS1 and CSS2, but the actual implementation and support were often incomplete and buggy. Today, most modern browsers support CSS3 and all major browsers support the CSS2 and CSS2.1 specifications, so there is no reason not to use CSS for your website’s layout.
